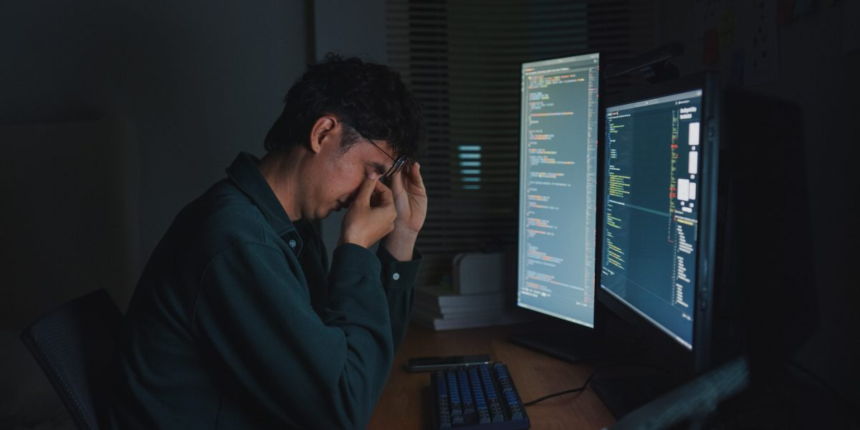

But where it gets interesting is what the NANDA study said about the apparent reasons for these failures. The biggest problem, the report found, was not that the AI models weren’t capable enough (although execs tended to think that was the problem). Instead, the researchers discovered a “learning gap”: people and organizations simply did not understand how to use the AI tools properly or how to design workflows that could capture the benefits of AI while minimizing downside risks.

Large language models seem simple—you can give them instructions in plain language, after all. But it takes expertise and experimentation to embed them in business workflows. Wharton professor Ethan Mollick has suggested that the real benefits of AI will come when companies abandon trying to get AI models to follow existing processes—many of which he argues reflect bureaucracy and office politics more than anything else—and simply let the models find their own way to produce the desired business outcomes. (I think Mollick underestimates the extent to which processes in many large companies reflect regulatory demands, but he no doubt has a point in many cases.)

This phenomenon may also explain why the MIT NANDA research found that startups, which often don’t have such entrenched business processes to begin with, are much more likely to find genAI can deliver ROI.

The report also found that companies that bought AI models and solutions from vendors were more successful than enterprises that tried to build their own systems. Purchasing AI tools succeeded 67% of the time, while internal builds panned out only one-third as often. Some large organizations, especially in regulated industries, feel they have to build their own tools for legal and data privacy reasons. But in some cases organizations fetishize control, when they would be better off handing the hard work off to a vendor whose entire business is creating AI software.

Building AI models or systems from scratch requires a level of expertise many companies don’t have and can’t afford to hire. It also means that companies are building their AI systems on open-source or open-weight LLMs, and while the performance of these models has improved markedly in the past year, most open-source AI models still lag their proprietary rivals. And when it comes to using AI in actual business cases, a 5% difference in reasoning abilities or hallucination rates can result in a substantial difference in outcomes.

Finally, the MIT report found that many companies are deploying AI in marketing and sales, when the tools might have a much bigger impact if used to take costs out of back-end processes and procedures. This too may contribute to AI’s missing ROI.

The overall thrust of the MIT report was that the problem was not the tech. It was how companies were using the tech. But that’s not how the stock market chose to interpret the results. To me, that says more about the irrational exuberance in the stock market than it does about the actual impact AI will have on business in five years’ time.

With that, here’s the rest of the AI news.

Jeremy Kahn

jeremy.kahn@fortune.com

@jeremyakahn