Why the buzz? Two big reasons: For one thing, AI’s attention span has historically been short. In the early days of ChatGPT, models could stay on task for only a few seconds. That horizon stretched to minutes for better models, then to hours. Cursor says its project is one of the first times an AI system has sustained a complex, open-ended software project for an entire week without human guidance.

The researchers found that the answer was mostly yes. Cursor’s experiment orchestrated hundreds of agents into something like a software team. It had “planners,” “workers,” and “judges” coordinating across millions of lines of code. This hints at what both Cursor and OpenAI say is a near future in which AI doesn’t just assist employees, but takes on entire projects. That would fundamentally reshape how complex work gets done—first in software development, but then in other professions.

There have been AI swarm experiments for a couple of years now. But today, Cursor says, models are smarter and can stay coherent for much longer. The models can be run at a far larger scale, with a custom layer that orchestrates hundreds of agents and keeps them from descending into chaos.

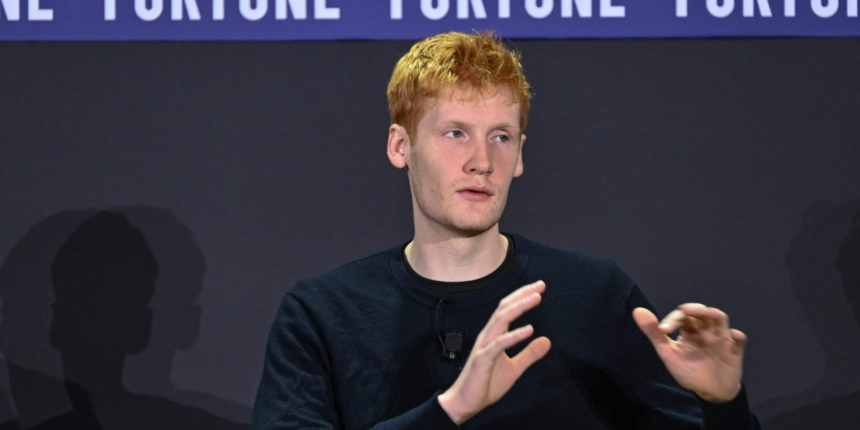

Jonas Nelle, an engineer at Cursor working on long-running AI agents, told Fortune that as AI models keep getting better, engineers and researchers need to revisit their assumptions every few months about what the AI models can do. While he admitted he “wouldn’t download it and delete Chrome today,” the browser project was “certainly better than anything models previously would have been able to do.”

These long-running agents are an important frontier, added Bill Chen, an OpenAI engineer who stress-tests and evaluates the real-world behavior of the company’s models. The length of a task, and the fact that an AI system can accomplish it autonomously and coherently, is a “very good indicator of how intelligent and how general a system is,” he said. The Cursor project, which was powered by OpenAI’s GPT-5.2, is “a direct result of us really continuously pushing forward the boundaries of model capabilities.” In the future, he said, there will be even longer-horizon tests.

Still, these are not production-ready systems. Besides being buggy and incomplete, a project running swarms of agents for days or weeks is expensive. While prices have fallen steeply over the past year, long-running jobs with hundreds of AI agents can still rack up costs.

There are also security issues. An autonomous system raises worries about vulnerabilities, data leaks, and much more, and requires many new layers of control and auditability.

But Chen said he foresees a near future where something like this could be ready “for broad consumption and at a not prohibitive cost.” Progress has been continuous so far, he explained, and there have been important unlocks every step of the way. For now, he said, the excitement is driven by the fact that this is a real, practical example of model capability, “versus how this model performs on academic and public evaluations and benchmarks.”