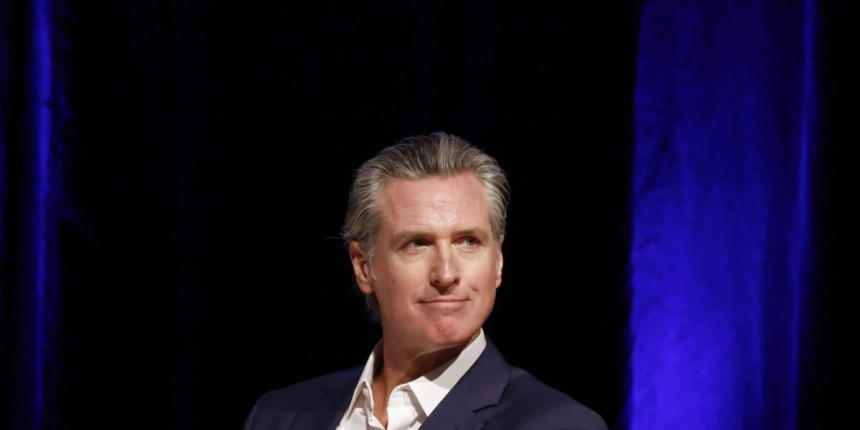

California has taken a significant step toward regulating artificial intelligence with Governor Gavin Newsom signing a new state law that will require major AI companies, many of which are headquartered in the state, to publicly disclose how they plan to mitigate the potentially catastrophic risks posed by advanced AI models.

Newsom framed the law as a balance between safeguarding the public and encouraging innovation. In a statement, he wrote: “California has proven that we can establish regulations to protect our communities while also ensuring that the growing AI industry continues to thrive. This legislation strikes that balance.”

The legislation, known as SB 53 (Senate Bill 53) and authored by State Sen. Scott Wiener, follows a failed attempt to pass a similar AI law last year. Wiener said the new law focuses on transparency rather than liability, a departure from his earlier bill, SB 1047, which Newsom vetoed.

“SB 53’s passage marks a notable win for California and the AI industry as a whole,” said Sunny Gandhi, VP of Political Affairs at Encode AI, a co-sponsor of SB 53. “By establishing transparency and accountability measures for large-scale developers, SB 53 ensures that startups and innovators aren’t saddled with disproportionate burdens, while the most powerful models face appropriate oversight. This balanced approach sets the stage for a competitive, safe, and globally respected AI ecosystem.”

Although it is a state law, the California legislation will have global reach, since 32 of the world’s top 50 AI companies are based in the state. The law requires AI firms to report incidents to California’s Office of Emergency Services and protects whistleblowers, allowing engineers and other employees to raise safety concerns without risking their careers. SB 53 also includes civil penalties for noncompliance, enforceable by the state attorney general, though AI policy experts such as Miles Brundage note that these penalties are relatively weak, even compared with those under the EU’s AI Act.

By requiring public disclosures and incident reporting, California aims to promote transparency and accountability in the AI sector. However, critics such as McCune argue that the law could make compliance challenging for smaller firms and entrench Big Tech’s dominance in AI.

Thomas Woodside, a co-founder of the Secure AI Project, a co-sponsor of the law, called the concerns about startups “overblown.”

“This bill is only applying to companies that are training AI models with a huge amount of compute that costs hundreds of millions of dollars, something that tiny startups can’t do,” he told Fortune. “Reporting very serious things that go wrong, and whistleblower protections, is a very basic level of transparency; and the obligations don’t even apply to companies that have less than $500 million in annual revenue.”